11/26/2019

The transcription industry has evolved considerably over the past 10 years. Academic and healthcare organizations remain the largest transcription customers.

However, other industries such as financial, legal, manufacturing, and education also make up a significant percentage of the customer base.

Automatic Speech Recognition (ASR) software has made our daily routines more convenient. For instance, Alexa can now tell you what the weather will look like today.

Like most industries, the transcription industry has been affected by ASR. This software is increasingly being used by various players that require transcripts.

ASR is a cheap transcription solution. However, there is a big problem with the accuracy of ASR transcripts.

According to research comparing the accuracy rates of human transcriptionists and ASR software, human transcriptionists had an error rate of about 4% while commercially available ASR transcription software’s error rate was found to be 12%.

In a nutshell, the error rate of ASR is three times that of humans.

In 2017, Google announced that its voice recognition software had attained a Word Error Rate (WER) of about 4.9%. Is it really possible?

Let’s look at how ASR works and what its implications are for the transcription and translation industry.

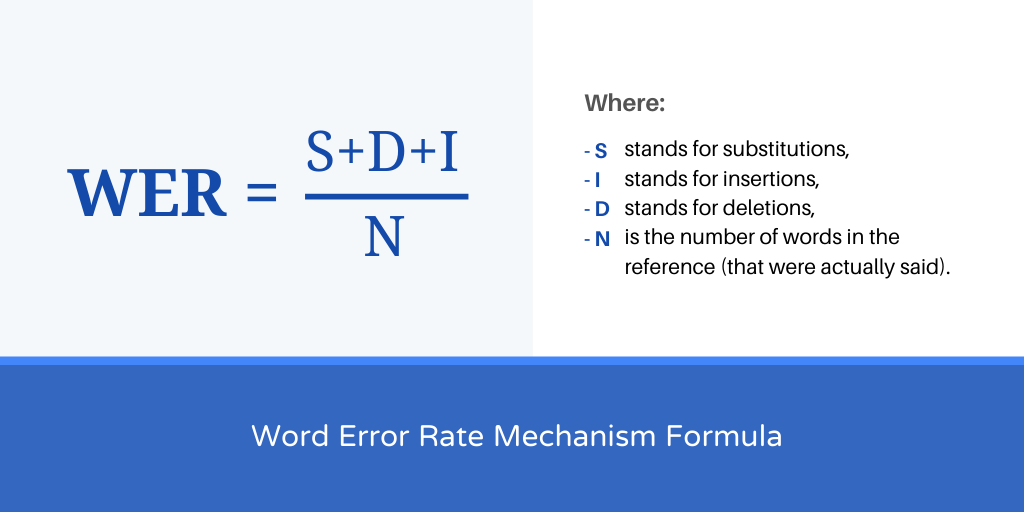

Word Error Rate (WER) is a common metric used to compare the accuracy of the transcripts produced by speech recognition APIs.

Here is a simple formula to understand how Word Error Rate (WER) is calculated:

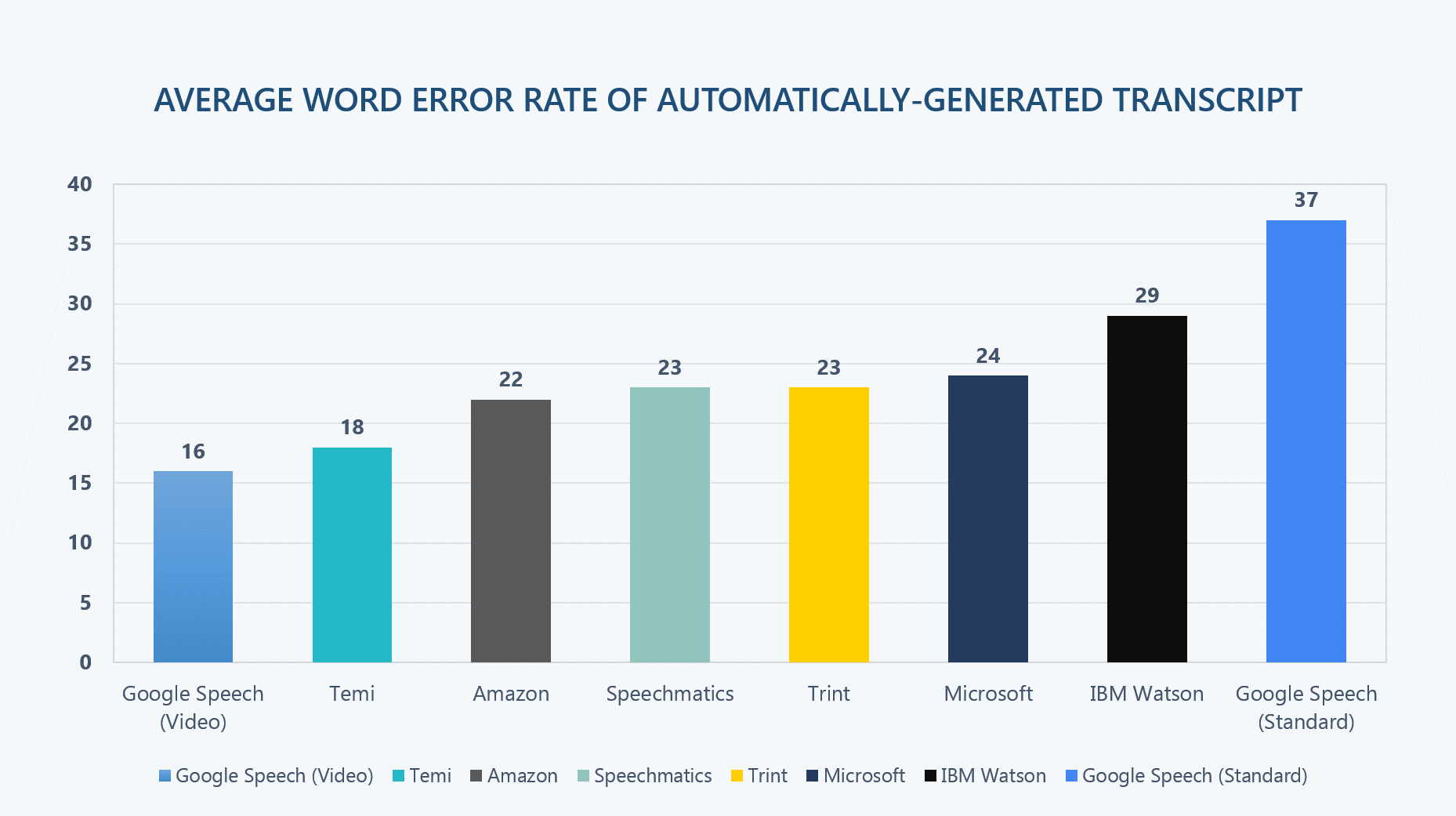
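In text form, WER is conventionally defined as the number of substituted, deleted, and inserted words divided by the number of words in the reference (human-verified) transcript: WER = (S + D + I) / N. The snippet below is a minimal sketch of that calculation, not the method of any particular vendor. The function name `word_error_rate` is hypothetical, and lowercasing plus whitespace splitting is a simplifying assumption; real evaluations usually normalize punctuation and numbers as well.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference words."""
    # Simplifying assumption: lowercase and split on whitespace only.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the word-level edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "the weather will be sunny today"
    hypothesis = "the weather will be funny"
    # One substitution (sunny -> funny) and one deletion (today) over 6 words.
    print(f"WER: {word_error_rate(reference, hypothesis):.1%}")
```

Because insertions count as errors, WER can exceed 100% when the hypothesis contains many extra words.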

For speech recognition APIs like IBM Watson and Google Speech, a 25% word error rate is about average for regular speech recognition.

The more technical, more “accented”, more industry-specific, and noisier the speech data is, the less accurate a general speech recognition API (or a human) is likely to be.

Human transcriptionists charge more for technical and industry-specific language, and there’s a reason for it.

Reliably recognizing industry terms is complex and takes effort. Because of this, speech recognition systems trained on “average” data struggle with more specialized words.

What is construed as a strong accent in Dublin is normal in New York. Large companies like Google, Nuance and IBM have built speech recognition systems which are very familiar with “General American English” and British English.

However, they may not be familiar with the different accents and dialects of English spoken in different cities around the world.

Noisy audio and background noise are unwelcome but common in many audio files. People rarely make business calls from a sound studio, and both VoIP and traditional phone systems compress audio, which cuts off many frequencies and adds noise artifacts.

Enterprise ASR software is built to understand a given accent and a limited number of words.

For example, the ASR software of some large companies is tuned to the National Switchboard Corpus, a popular database of phone conversations that have already been transcribed.

Unfortunately, in the real world, audio files are different. For example, they may feature speakers with different accents or speaking different languages.

Also, most ASR software uses WER to measure transcription errors. This measure has its shortfalls, such as:

Researchers from leading companies like Google, Baidu, IBM, and Microsoft have been racing to achieve the lowest-ever Word Error Rates from their speech recognition engines, and the race has yielded remarkable results.

Driven by advances in neural networks and the massive datasets these companies have compiled, WERs have improved enough to grab headlines about matching or even surpassing human accuracy.

Microsoft researchers report that their ASR engine has a WER of 5.1%, IBM reports 5.5% for Watson, and Google claims an error rate of just 4.9%.

However, these tests were conducted by using a common set of audio recordings, i.e., a corpus called Switchboard, which consists of a large number of recordings of phone conversations covering a broad array of topics.

Switchboard is a reasonable choice, as it has been used in the field for many years and is nearly ubiquitous in the current literature.

Also, by testing against the same audio corpus (a database of speech audio files), researchers are able to compare their results with those of their competitors.

Google is the lone exception, as it uses its own, internal test corpus (large structured set of texts).

This type of testing is limited, as the claims of surpassing human transcriptionists are based on a very specific kind of audio.

Real-world audio, however, isn’t perfect or consistent. It has many variables, and all of them can have a significant impact on transcription accuracy.

ASR transcription accuracy rates don’t come close to the accuracy of human transcriptionists.

ASR transcription is also affected by crosstalk, accents, background noise in the audio, and unknown words. In such instances, the accuracy will be poorer.

If you want to use ASR for transcription, be prepared to deal with:

If you choose ASR transcription only because it’s cheap, you risk getting low-quality transcripts full of errors. Such a transcript can cost you business, money, and even customers.

Here are two examples of businesses that had to pay dearly for machine-made transcription errors.

In 2006, Alitalia Airlines offered business class flights to its customers at a subsidized price of $39 compared to their usual $3900 price.

Unknown to the customers, a copy-paste error had been made and the subsidized price was a mistake.

More than 2000 customers had already booked the flight by the time the error was corrected.

The customers wouldn’t accept the cancellation of their purchased tickets, and the airline had no choice but to honor the reduced price, leading to a loss of more than $7.2 million.

Another company, Fidelity Magellan Fund, had to cancel its dividend distribution when a transcription error saw it posting a capital gain of $1.3 billion rather than a loss of a similar amount.

The transcription had omitted the negative sign, turning a $1.3 billion loss into a $1.3 billion gain and overstating the dividend estimate by $2.6 billion.

ASR transcription may be cheaper than human transcription. However, its errors can be costly.

When you want accurate transcripts, human transcriptions are still the best option.

What is the way forward? Should you transition to automatic transcription or stay with a reliable manual transcription services provider?

Automatic transcription is fast and will save you time when you are on a deadline. However, in almost all cases, the transcripts will have to be brushed up for accuracy by professional transcriptionists.

Trained human transcriptionists can accurately identify complex terminology, accents, different dialects, and the presence of multiple speakers.

The type of project should help you determine what form of transcription is best for you.

If you are looking for highly accurate transcripts or work in a specialized field such as legal, academic, or medical, then working with a company that specializes in human transcription will be your best option.

Unlike our peers, who have moved towards automated technology to gain a competitive cost advantage and maximize profits at the cost of accuracy, we stand our ground by employing only US-based human transcriptionists whom we can trust for quality and confidentiality.

Our clients generally belong to niches such as legal, academic, and business, where quality matters above all.

We have an unwavering commitment to client satisfaction, rather than mere concern for profit.